Cloud Infrastructure and Service Management full tutorials

Cloud Infrastructure and Service Management

Cloud Architect Salary Range in India

Entry-Level Cloud Architect (0–3 Years)

- Salary Range: ₹6,00,000 – ₹12,00,000 per year

- Monthly Range: ₹50,000 – ₹1,00,000

Mid-Level Cloud Architect (3–7 Years)

- Salary Range: ₹12,00,000 – ₹24,00,000 per year

- Monthly Range: ₹1,00,000 – ₹2,00,000

Senior Cloud Architect (7+ Years)

- Salary Range: ₹25,00,000 – ₹50,00,000+ per year

- Monthly Range: approximately ₹2,08,000 – ₹4,17,000+

CLOUD INFRASTRUCTURE

Cloud Infrastructure, Deep Architecture, and Cloud Service Management:

Cloud Infrastructure and Deep Architecture

- Fundamentals of Cloud Computing

- Cloud Deployment Models (Public, Private, Hybrid, and Community)

- Cloud Service Models (IaaS, PaaS, SaaS)

- Virtualization Technologies

- Data Center Design and Architecture

- Scalability and Elasticity in Cloud

- Load Balancing in Cloud

- Cloud Storage Architectures

- Containerization and Orchestration (Docker, Kubernetes)

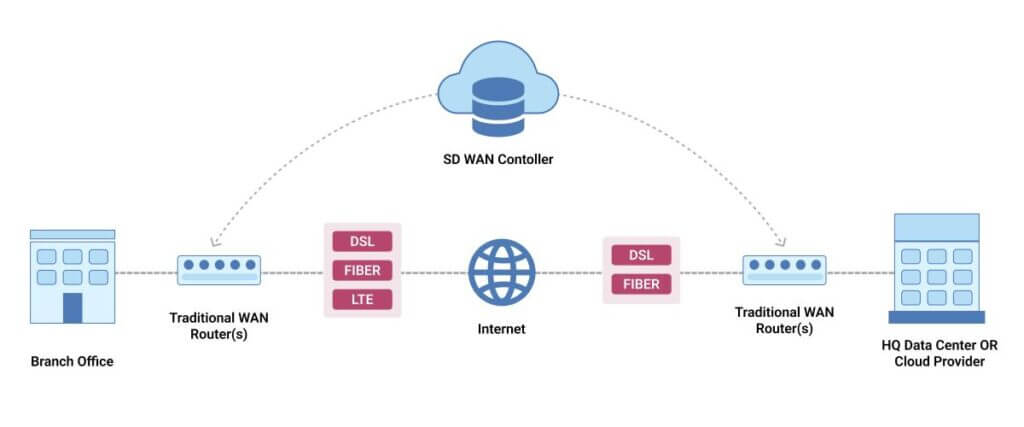

- Networking in Cloud (SDN, VPNs)

- Security in Cloud Infrastructure

- High Availability and Disaster Recovery

- Emerging Cloud Technologies (Edge Computing, Fog Computing)

- Serverless Computing

Cloud Service Management

- Cloud Service Lifecycle

- Service Level Agreements (SLAs) in Cloud

- Cloud Governance and Compliance

- Billing and Cost Management in Cloud

- Cloud Monitoring and Analytics

- Resource Provisioning and Management

- Automation in Cloud Service Management

- Incident Management in Cloud

- Identity and Access Management (IAM)

- Cloud Vendor Management

- Cloud Migration Strategies

- Backup and Restore in Cloud

- Performance Optimization of Cloud Services

- Multi-Cloud and Hybrid Cloud Management

1 Introduction to Cloud Infrastructure and Service Management:

PART 1

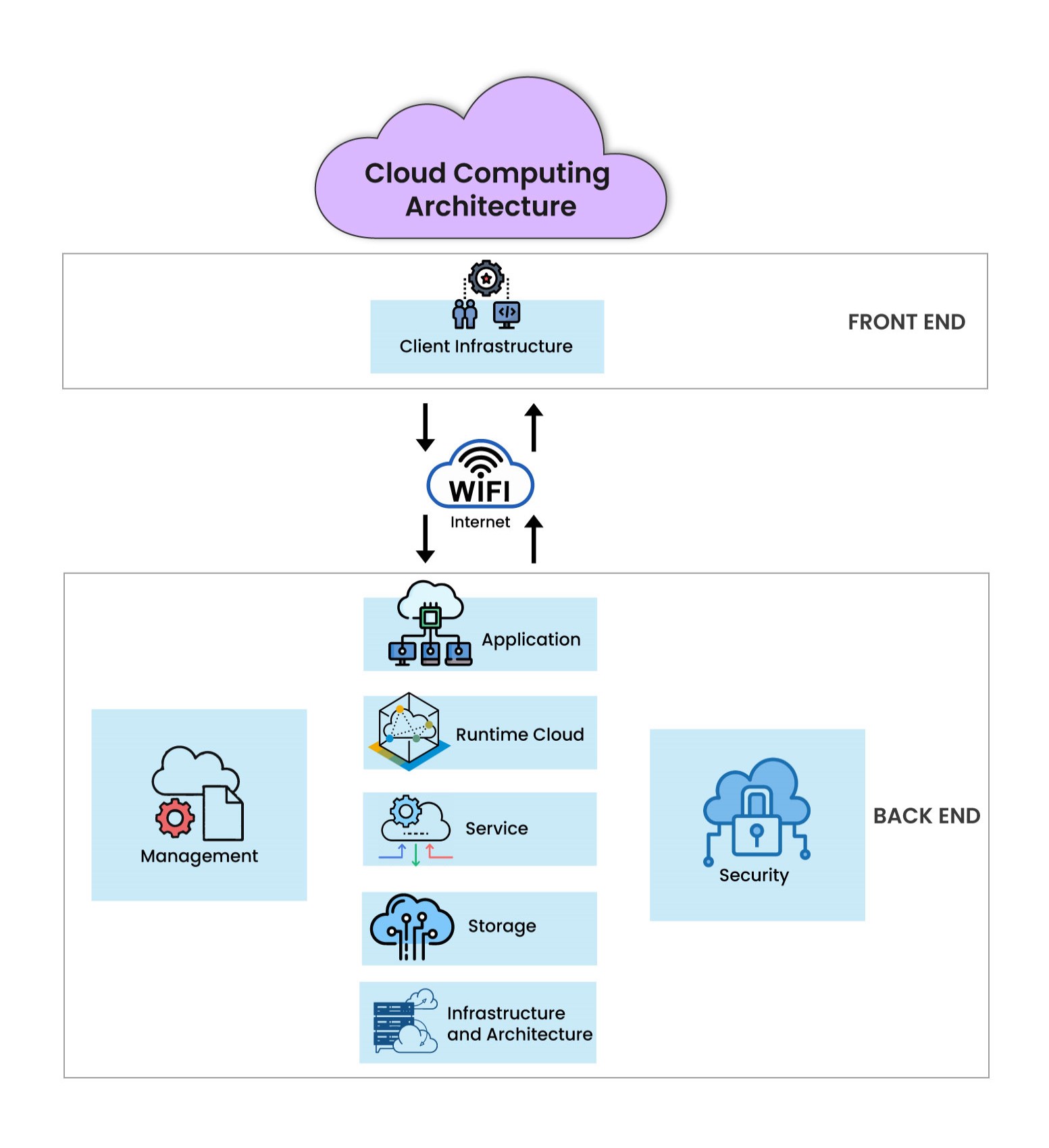

Cloud infrastructure and service management are critical components of modern cloud computing that enable organizations to deliver and manage services effectively over the internet. These components form the backbone of cloud technology, ensuring scalability, reliability, and cost efficiency.

Cloud Infrastructure: Definition and Explanation

Cloud Infrastructure refers to the combination of hardware, software, and networking resources that together provide the foundation for cloud computing. It enables the delivery of computing services over the internet, such as storage, processing power, and applications.

Key Components of Cloud Infrastructure

- Computing Resources: Virtual servers, containers, and virtual machines (VMs) that execute workloads.

- Storage Resources: Systems for storing data, such as object storage, block storage, and file storage.

- Networking Resources: Virtual networks, Software Defined Networking (SDN), and Virtual Private Networks (VPNs).

- Virtualization: Abstracting physical hardware into virtual resources for efficient utilization.

- Data Centers: Physical facilities housing servers, storage, and networking components.

Types of Cloud Infrastructure

- Public Cloud: Hosted by third-party providers (e.g., AWS, Azure).

- Private Cloud: Dedicated infrastructure for a single organization.

- Hybrid Cloud: Combines public and private clouds for flexibility.

- Community Cloud: Shared infrastructure for a specific community or industry.

Cloud Service Management: Definition and Explanation

Cloud Service Management involves overseeing and controlling the delivery, performance, and availability of cloud services. It ensures that services meet the agreed-upon standards and support organizational goals.

Key Aspects of Cloud Service Management

- Service Lifecycle Management: Managing services from inception to retirement.

- Monitoring and Analytics: Continuously tracking performance, usage, and availability.

- Cost Management: Optimizing resource allocation to control costs.

- Automation: Automating repetitive tasks to enhance efficiency.

- Compliance and Governance: Ensuring adherence to regulations and internal policies.

Key Activities in Cloud Service Management

- Provisioning: Allocating resources to users or applications.

- Incident Management: Resolving issues that impact service delivery.

- Performance Optimization: Fine-tuning services for better performance.

- Backup and Recovery: Ensuring data protection and service continuity.

- Identity and Access Management (IAM): Controlling access to resources.

Fundamentals of Cloud Computing

Definition & Characteristics:

- Delivery of computing services over the internet.

- Key traits: On-demand self-service, broad network access, resource pooling, rapid elasticity, and measured service (the five essential characteristics in the NIST definition).

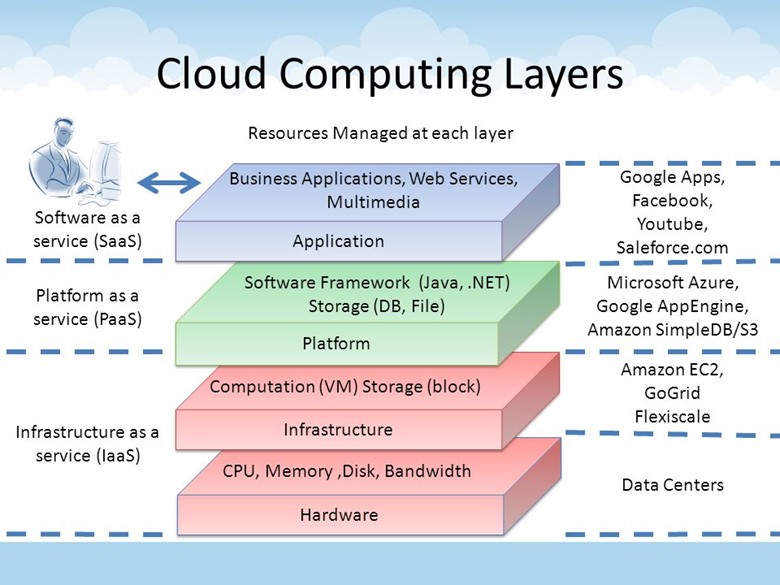

Service Models:

- IaaS: Infrastructure (e.g., AWS EC2).

- PaaS: Development platforms (e.g., Google App Engine).

- SaaS: Software delivery (e.g., Salesforce).

Deployment Models:

- Public Cloud: Shared services (e.g., Azure).

- Private Cloud: Dedicated for one organization.

- Hybrid Cloud: Mix of public & private.

- Community Cloud: Shared by specific groups.

Advantages:

- Cost-efficient, scalable, reliable, flexible, secure.

Risks:

- Data security, downtime, vendor lock-in, cost overruns.

Enabling Technologies:

- Virtualization, networking, automation, APIs.

Common Use Cases:

- Storage, app hosting, big data, AI, content delivery.

Security Principles:

- Authentication, encryption, compliance.

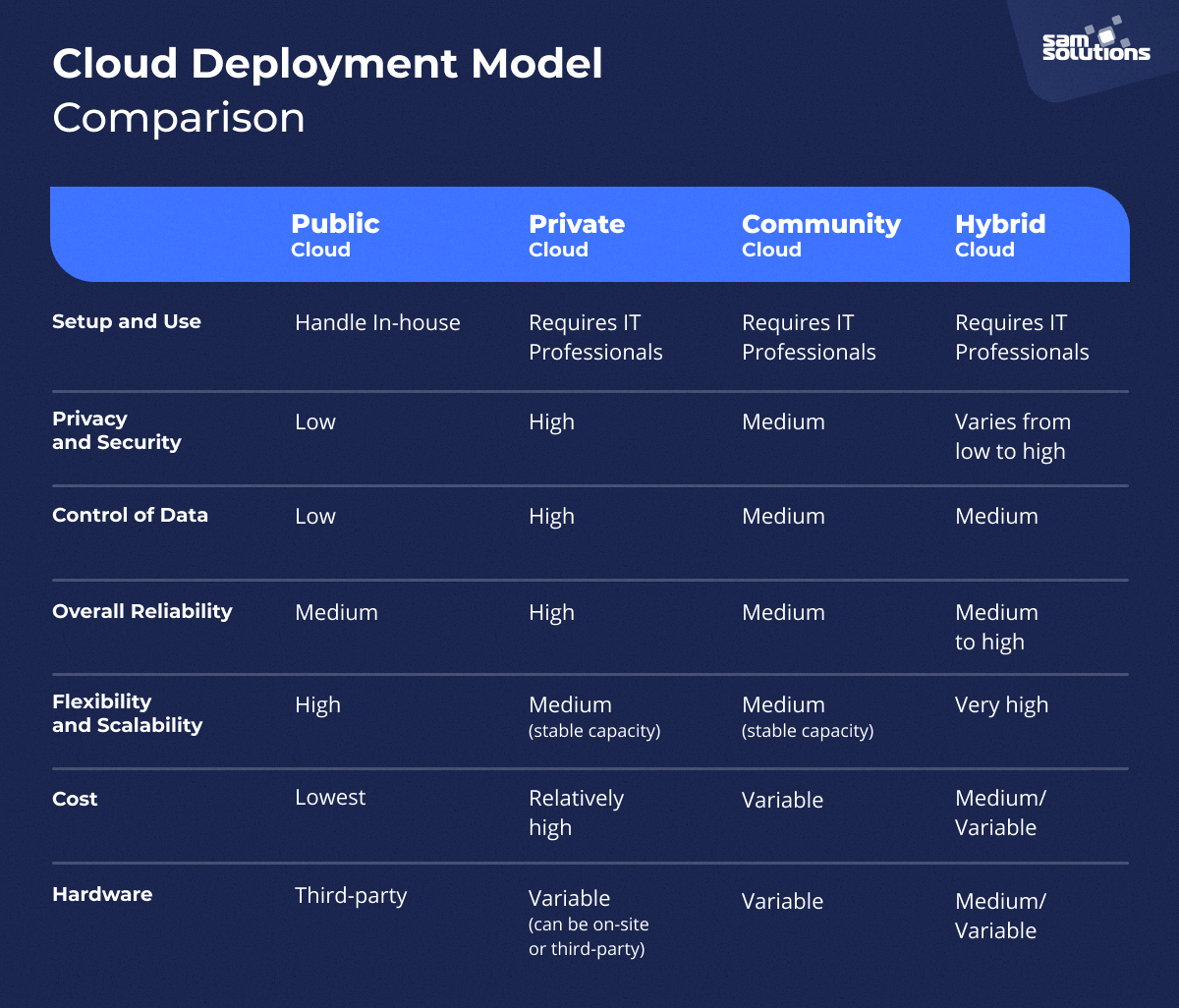

2 Cloud Deployment Models: Key Points

Public Cloud

- Shared Resources: Accessible by multiple users via the internet.

- Cost: Pay-as-you-go, low maintenance.

- Examples: AWS, Azure, Google Cloud.

Private Cloud

- Exclusive Use: Dedicated to one organization.

- Benefits: Enhanced security, customizable.

- Cost: Higher due to maintenance and setup.

Hybrid Cloud

- Combination: Mix of public and private clouds.

- Key Feature: Flexibility with data and scalability.

- Use Case: Balancing sensitive data with public resources.

Community Cloud

- Shared Purpose: Infrastructure for specific industries/groups.

- Focus: Compliance and cost-sharing.

- Examples: Healthcare or finance sectors.

3 Cloud Service Models (IaaS, PaaS, SaaS)

1. Infrastructure as a Service (IaaS)

- Definition: Provides virtualized computing resources like servers, storage, and networking.

- Key Terms:

- Virtual Machines (VMs): Compute power on demand.

- Scalability: Adjust resources as needed.

- Examples: AWS EC2, Microsoft Azure Virtual Machines, Google Compute Engine.

- Use Case: Hosting websites, data storage, testing environments.

2. Platform as a Service (PaaS)

- Definition: Offers a platform for developers to build, test, and deploy applications.

- Key Terms:

- Development Tools: Frameworks and APIs for coding.

- Managed Environment: No need to manage underlying infrastructure.

- Examples: AWS Elastic Beanstalk, Google App Engine, Microsoft Azure App Service.

- Use Case: Application development, streamlining deployment.

3. Software as a Service (SaaS)

- Definition: Delivers software applications over the internet on a subscription basis.

- Key Terms:

- On-Demand Software: Ready-to-use applications.

- Accessibility: Use via browsers without installation.

- Examples: Gmail, Microsoft Office 365, Salesforce.

- Use Case: Email, customer relationship management (CRM), collaboration tools.
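
One way to compare the three models is to list which layers of the stack the customer still manages under each. The sketch below encodes a common simplified breakdown; the layer names and the exact split are illustrative assumptions, and real providers draw the lines differently per service.

```python
# Simplified stack, bottom to top. Layer names are illustrative, not a standard.
LAYERS = ["networking", "storage", "servers", "virtualization",
          "operating_system", "runtime", "application", "data"]

# Index of the first layer the customer manages under each model (assumed split).
CUSTOMER_STARTS_AT = {
    "IaaS": LAYERS.index("operating_system"),
    "PaaS": LAYERS.index("application"),
    "SaaS": LAYERS.index("data"),
}


def customer_managed(model: str) -> list[str]:
    """Return the layers the customer is responsible for under a service model."""
    return LAYERS[CUSTOMER_STARTS_AT[model]:]


def provider_managed(model: str) -> list[str]:
    """Return the layers the provider operates under a service model."""
    return LAYERS[:CUSTOMER_STARTS_AT[model]]


if __name__ == "__main__":
    for model in ("IaaS", "PaaS", "SaaS"):
        print(model, "-> customer manages:", ", ".join(customer_managed(model)))
```

Moving from IaaS to SaaS shortens the customer's list, which is the practical meaning of "managed environment" in the PaaS description above.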

4 Virtualization Technologies

1. VMware vSphere/ESXi

- Overview: VMware vSphere, powered by ESXi, is a leading enterprise-level virtualization platform.

- Key Features:

- High scalability and performance.

- Advanced management tools like vCenter.

- Supports hybrid cloud integration.

- Best Use Cases: Data centers, enterprise IT environments, and cloud infrastructure.

2. Microsoft Hyper-V

- Overview: Built into Windows Server, Hyper-V is Microsoft's solution for virtualization.

- Key Features:

- Seamless integration with Windows and Azure.

- Live Migration for minimal downtime.

- Robust disaster recovery features.

- Best Use Cases: Windows-based environments and hybrid cloud solutions.

3. Oracle VM VirtualBox

- Overview: A free hosted (Type 2) hypervisor with an open-source core that runs on top of an existing operating system, for personal and enterprise use.

- Key Features:

- Cross-platform compatibility.

- Supports a wide variety of guest operating systems.

- Easy to set up and use.

- Best Use Cases: Development, testing environments, and lightweight virtualization needs.

4. KVM (Kernel-Based Virtual Machine)

- Overview: An open-source virtualization technology built into Linux.

- Key Features:

- Native Linux kernel integration.

- Excellent performance for Linux workloads.

- Supports a broad range of guest operating systems.

- Best Use Cases: Linux-based servers and open-source ecosystems.

5. Citrix XenServer

- Xen Hypervisor: Robust and mature virtualization core.

- Enterprise-Grade: Suitable for large organizations.

- Advanced Management: Tools for automation and orchestration.

- High Performance: Optimized for both Windows and Linux VMs.

- Security Features: Enhanced isolation and protection mechanisms.

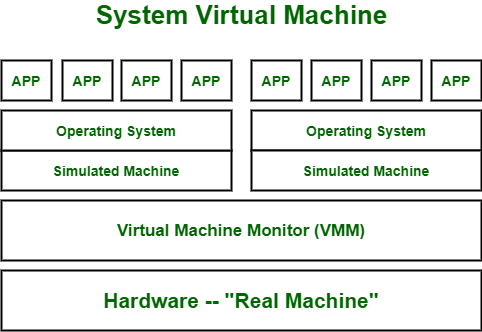

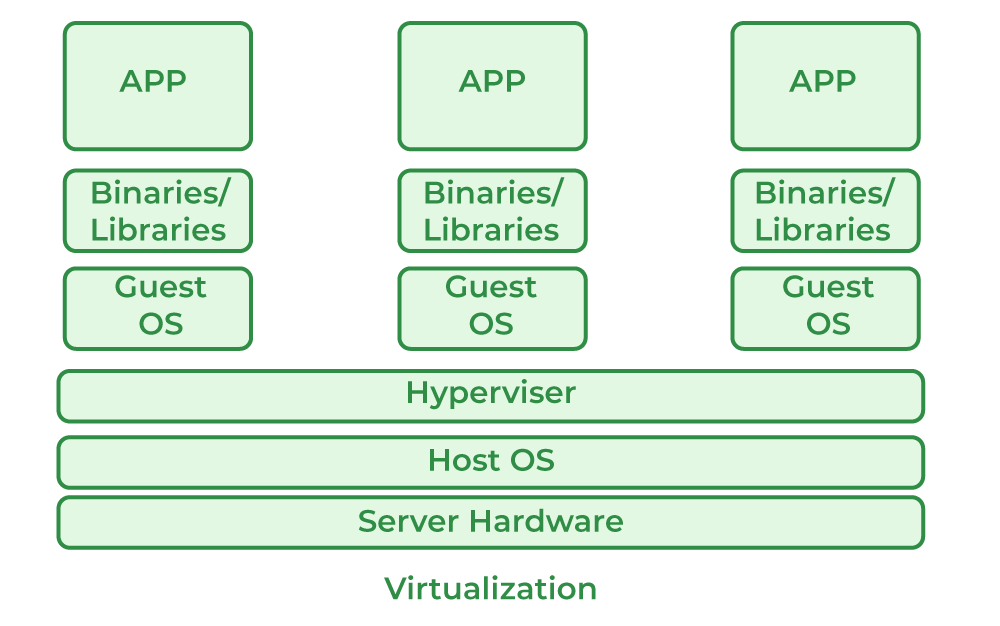

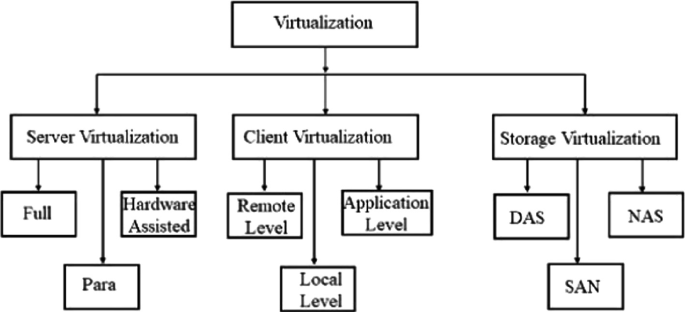

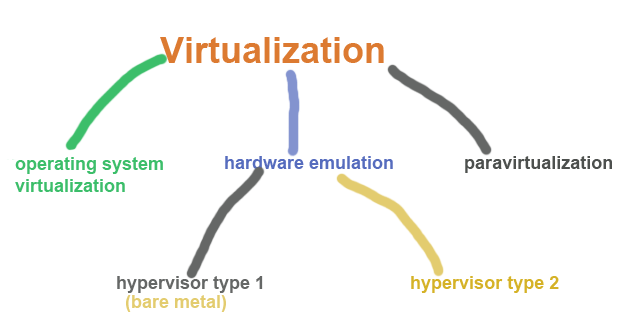

Virtualization Definition

Virtualization is the process of creating virtual versions of physical IT resources like servers, storage, networks, or operating systems, improving resource utilization, scalability, and flexibility.

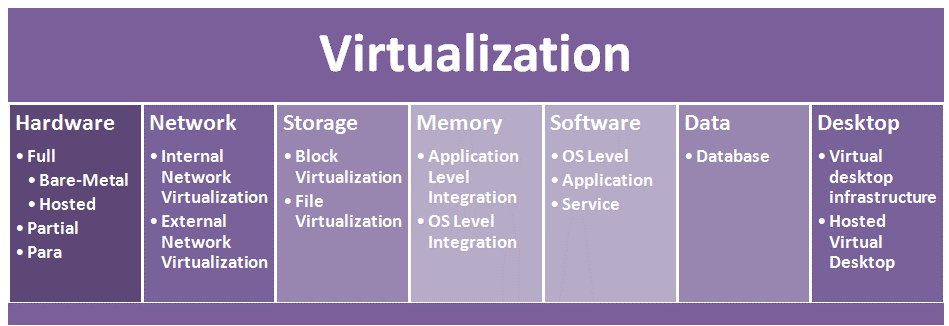

Types of Virtualization (Short)

- Server Virtualization: Splits one physical server into multiple virtual servers.

- Desktop Virtualization: Centralized virtual desktops accessible remotely.

- Network Virtualization: Combines or segments networks virtually.

- Storage Virtualization: Pools multiple storage devices into one virtual storage.

- Application Virtualization: Runs applications in isolated virtual environments.

- OS Virtualization: Runs multiple isolated user-space instances on a single shared OS kernel (e.g., containers).

- Hardware Virtualization: Virtualizes physical hardware for multiple OS use.

- Data Virtualization: Creates a unified virtual view of data from various sources.

5 Data Center Design and Architecture

1. Tier Classification

- Overview: Data centers are classified into four tiers (Tier I to Tier IV) by the Uptime Institute, based on redundancy, uptime, and fault tolerance.

- Tier I: Basic infrastructure, 99.671% uptime, no redundancy.

- Tier II: Redundant capacity components, 99.741% uptime.

- Tier III: Concurrently maintainable, 99.982% uptime.

- Tier IV: Fault-tolerant, 99.995% uptime.

- Why Important?: Determines reliability and the ability to meet SLAs (Service Level Agreements).
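
The uptime percentages above are easier to compare once converted into allowed downtime per year. The following is a minimal sketch of that arithmetic, assuming a 365-day year; the function and constant names are made up for illustration.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years

# Uptime figures as listed in the tier classification above.
TIER_UPTIME_PERCENT = {
    "Tier I": 99.671,
    "Tier II": 99.741,
    "Tier III": 99.982,
    "Tier IV": 99.995,
}


def annual_downtime_hours(uptime_percent: float) -> float:
    """Hours per year a facility may be unavailable at the given uptime."""
    return (100.0 - uptime_percent) / 100.0 * HOURS_PER_YEAR


if __name__ == "__main__":
    for tier, uptime in TIER_UPTIME_PERCENT.items():
        print(f"{tier}: {annual_downtime_hours(uptime):.1f} hours/year")
```

With these figures, Tier I allows roughly 28.8 hours of downtime a year, while Tier IV allows about 26 minutes.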

2. Cooling Systems and Environmental Control

- Cooling Technologies:

- CRAC Units (Computer Room Air Conditioners): For precise cooling.

- Hot Aisle/Cold Aisle Containment: Improves airflow efficiency.

- Liquid Cooling: For high-density servers.

- Environmental Monitoring:

- Temperature, humidity, and airflow sensors.

- Why Important?: Effective cooling ensures server longevity and prevents downtime due to overheating.

3. Redundancy and Power Management

- Key Concepts:

- Uninterruptible Power Supply (UPS): Provides backup power during outages.

- Generators: For long-term power redundancy.

- Dual Power Supplies: Ensures continuous operation for critical systems.

- N+1 Redundancy: One extra unit for backup (e.g., power, cooling).

- Why Important?: Ensures continuous operation and avoids single points of failure.

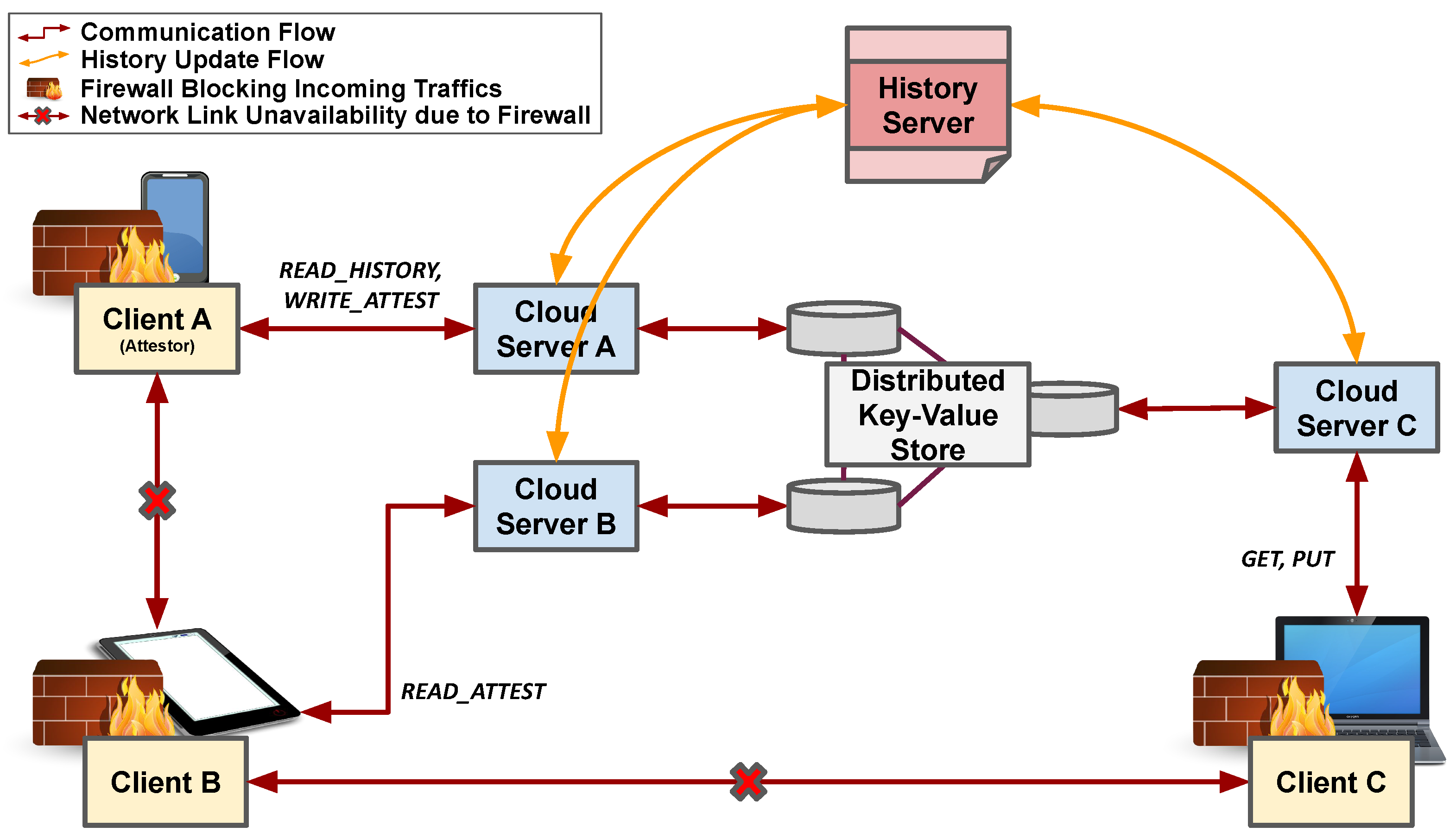

4. Networking and Connectivity

- Design Principles:

- High Bandwidth and Low Latency: For faster data transmission.

- Redundant Network Links: To prevent outages due to link failure.

- Software-Defined Networking (SDN): Increases flexibility and efficiency.

- Firewall and Security Protocols: To protect against cyber threats.

- Why Important?: Maintains uninterrupted connectivity and secure communication.

5. Scalability and Modular Design

- Modular Data Centers:

- Components like power, cooling, and IT infrastructure are modular.

- Easily expandable as demand grows.

- Space Utilization:

- Efficient rack placement for maximum server density.

- Flexible floor designs for future growth.

- Why Important?: Adapts to business growth while optimizing costs and resources.

| Feature | Benefit |
|---|---|
| Priority-based Flow Control (PFC) (IEEE 802.1Qbb) | Provides the capability to manage a bursty, single-traffic source on a multiprotocol link |
| Enhanced Transmission Selection (ETS) (IEEE 802.1Qaz) | Enables bandwidth management between traffic types for multiprotocol links |
| Congestion Notification (IEEE 802.1Qau) | Addresses the problem of sustained congestion by moving corrective action to the network edge |
| Data Center Bridging Exchange (DCBX) Protocol | Allows autoexchange of Ethernet parameters between switches and endpoints |

6 Scalability and Elasticity in Cloud

Scalability vs. Elasticity in Cloud Computing

1. Scalability

- Definition: Ability to handle increased workloads by adding or removing resources.

- Types:

- Vertical (Scaling Up/Down): Increasing resource size (e.g., upgrading a server).

- Horizontal (Scaling Out/In): Adding or removing resource instances (e.g., adding servers).

- Use Case: Long-term growth (e.g., expanding database capacity).

2. Elasticity

- Definition: Dynamic adjustment of resources in real-time based on demand.

- Key Feature: Automatic scaling without manual intervention.

- Use Case: Short-term spikes (e.g., traffic surge during sales).
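
Elastic scaling is usually driven by a simple rule that compares an observed metric with a target. The sketch below shows one proportional rule of that kind; the function name, the CPU metric, and the instance limits are assumptions chosen for illustration, not any provider's API.

```python
import math


def desired_instances(current: int, observed_load: float, target_load: float,
                      min_instances: int = 1, max_instances: int = 10) -> int:
    """Proportional scaling: size the fleet so per-instance load approaches target."""
    if current < 1 or target_load <= 0:
        raise ValueError("current must be >= 1 and target_load must be positive")
    wanted = math.ceil(current * observed_load / target_load)
    # Clamp to the configured floor and ceiling to bound cost and keep a baseline.
    return max(min_instances, min(max_instances, wanted))


if __name__ == "__main__":
    # Average CPU per instance (percent) sampled during a traffic surge and after it.
    fleet = 2
    for cpu in (50.0, 90.0, 95.0, 40.0, 20.0):
        fleet = desired_instances(fleet, cpu, target_load=60.0)
        print(f"cpu={cpu:.0f}% -> {fleet} instances")
```

The same function scales out when load is above target and scales in when it falls, which is what separates elasticity from a one-off capacity upgrade.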

Difference

| Aspect | Scalability | Elasticity |
|---|---|---|
| Nature | Planned growth | Real-time adjustment |
| Example | Adding servers over time | Auto-scaling for traffic |

Summary:

- Scalability: For planned capacity.

- Elasticity: For unpredictable demand.

Both are essential for cost-effective and reliable cloud solutions.

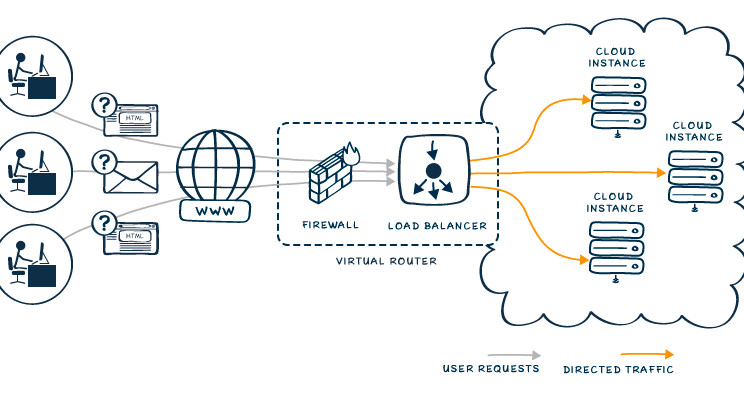

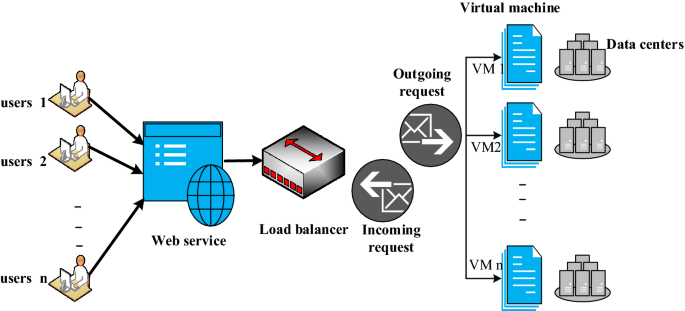

7 Load Balancing in Cloud

Load balancing is a critical concept in cloud computing, ensuring the efficient distribution of workloads across multiple servers, resources, or instances to optimize performance, scalability, and availability.

1. What is Load Balancing?

- Definition: Load balancing distributes incoming network traffic or computational tasks across multiple servers or resources to ensure no single resource is overwhelmed.

- Goal: Maintain high availability, reliability, and performance.

2. Importance of Load Balancing

- Improved Performance: Distributes tasks to prevent bottlenecks.

- High Availability: Ensures continuous service even if one server fails.

- Scalability: Supports growth by managing traffic across scaled resources.

- Cost Efficiency: Optimizes resource usage to avoid overprovisioning.

3. Key Types of Load Balancing

- Hardware Load Balancers:

- Physical devices that distribute traffic.

- Used in traditional data centers.

- Software Load Balancers:

- Run as software on standard servers or virtual machines.

- Example: HAProxy, NGINX.

- Cloud Load Balancers:

- Fully managed by cloud providers.

- Example: AWS Elastic Load Balancer, Azure Load Balancer.

4. Algorithms Used in Load Balancing

- Round Robin: Cycles through the servers in order so each receives requests in turn.

- Least Connections: Sends traffic to the server with the fewest connections.

- Weighted Round Robin: Assigns more traffic to higher-capacity servers.

- Geolocation-Based: Routes users to servers closest to their location.
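
The first three algorithms above can be sketched in a few lines each. This is a toy illustration with made-up class names and server labels, not the implementation used by any particular load balancer.

```python
import itertools


class RoundRobin:
    """Hand out servers in a fixed rotating order."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(servers)

    def pick(self):
        return next(self._cycle)


class WeightedRoundRobin(RoundRobin):
    """Repeat each server in the rotation in proportion to its weight."""

    def __init__(self, weights):  # e.g. {"big": 2, "small": 1}
        expanded = [s for s, w in weights.items() for _ in range(w)]
        super().__init__(expanded)


class LeastConnections:
    """Pick the server with the fewest open connections."""

    def __init__(self, servers):
        self.active = {s: 0 for s in servers}

    def pick(self):
        server = min(self.active, key=self.active.get)
        self.active[server] += 1  # the new request opens a connection
        return server

    def release(self, server):
        self.active[server] -= 1  # call when the connection closes


if __name__ == "__main__":
    rr = RoundRobin(["a", "b", "c"])
    print([rr.pick() for _ in range(4)])
    wrr = WeightedRoundRobin({"big": 2, "small": 1})
    print([wrr.pick() for _ in range(4)])
```

The weighted variant here sends consecutive requests to the heavier server; production balancers typically interleave the rotation more smoothly.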

5. Features of Cloud Load Balancers

- Auto-Scaling Integration: Automatically adjusts server capacity based on traffic.

- Health Monitoring: Redirects traffic away from unhealthy servers.

- Global Distribution: Supports geographically distributed users.

- Security: Includes firewalls, SSL termination, and DDoS protection.

6. Real-World Use Cases

- E-Commerce: Handles traffic spikes during sales.

- Video Streaming: Distributes video content efficiently.

- Web Applications: Ensures high availability for multi-region deployments.

7. Examples of Cloud Load Balancers

- AWS Elastic Load Balancer (ELB): Supports multiple protocols (HTTP, HTTPS, TCP).

- Azure Load Balancer: Offers public and internal load balancing.

- Google Cloud Load Balancer: Provides global load balancing for real-time applications.

Conclusion

Load balancing is vital for ensuring scalability, performance, and availability in cloud systems. It optimizes resource usage, improves user experience, and helps maintain service reliability, making it a key topic for exams and cloud architecture.

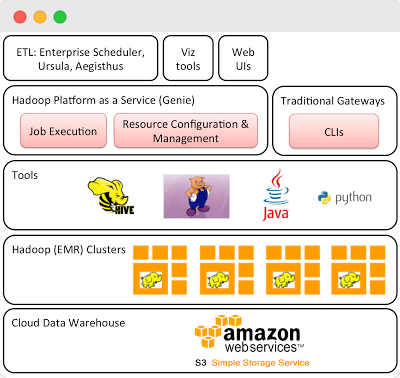

8 Cloud Storage Architectures

Cloud storage architectures define how data is stored, accessed, managed, and secured in cloud environments. Understanding these architectures is critical for exams and real-world applications as they address performance, scalability, and cost-efficiency.

1. Key Types of Cloud Storage Architectures

1.1 Object Storage

- Definition: Stores data as objects in a flat structure, where each object contains the data, metadata, and a unique identifier.

- Key Features:

- Virtually unlimited scalability.

- Accessible via APIs (e.g., REST, S3).

- Ideal for unstructured data.

- Use Cases:

- Storing media files, backups, and big data analytics.

- Example: Amazon S3 used by Netflix for storing video content.
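
The object model described above (data, metadata, and a unique identifier in a flat namespace) can be sketched as a small in-memory store. The class and method names here are hypothetical and do not mirror the S3 API.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class StoredObject:
    data: bytes
    metadata: dict = field(default_factory=dict)
    etag: str = ""  # content hash, computed on write


class ObjectStore:
    """Toy in-memory object store: one flat key space, no real directories."""

    def __init__(self):
        self._objects = {}

    def put(self, key: str, data: bytes, **metadata) -> str:
        etag = hashlib.sha256(data).hexdigest()
        self._objects[key] = StoredObject(data, dict(metadata), etag)
        return etag

    def get(self, key: str) -> StoredObject:
        return self._objects[key]

    def list_keys(self, prefix: str = "") -> list[str]:
        # "Folders" are only a naming convention: listing filters keys by prefix.
        return sorted(k for k in self._objects if k.startswith(prefix))


if __name__ == "__main__":
    store = ObjectStore()
    store.put("videos/2024/intro.mp4", b"...", content_type="video/mp4")
    store.put("backups/db.dump", b"...")
    print(store.list_keys("videos/"))
```

Because every object is addressed by its full key, the store can spread keys across many machines without maintaining a directory tree, which is one reason this model scales well.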

1.2 Block Storage

- Definition: Divides data into fixed-sized blocks, each with its own address but no metadata.

- Key Features:

- High performance for I/O-intensive workloads.

- Works like traditional storage for operating systems.

- Use Cases:

- Databases, virtual machines, and enterprise applications.

- Example: Amazon EBS used for virtual machine disk storage.
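
By contrast with objects, a block volume only knows numbered, fixed-size blocks. The following sketch assumes a 4,096-byte block size and hypothetical class names; it is meant to show the addressing model, not a real device driver.

```python
BLOCK_SIZE = 4096  # bytes; an assumed size, real volumes vary


class BlockVolume:
    """Toy block device: a fixed number of equally sized, numbered blocks."""

    def __init__(self, num_blocks: int):
        self._blocks = [bytes(BLOCK_SIZE) for _ in range(num_blocks)]

    def write_block(self, index: int, data: bytes) -> None:
        if len(data) > BLOCK_SIZE:
            raise ValueError("data does not fit in one block")
        # Pad with zero bytes so every block keeps the same size.
        self._blocks[index] = data.ljust(BLOCK_SIZE, b"\x00")

    def read_block(self, index: int) -> bytes:
        return self._blocks[index]


def write_bytes(volume: BlockVolume, start_block: int, payload: bytes) -> int:
    """Split payload across consecutive blocks; return how many blocks were used."""
    chunks = [payload[i:i + BLOCK_SIZE] for i in range(0, len(payload), BLOCK_SIZE)]
    for offset, chunk in enumerate(chunks):
        volume.write_block(start_block + offset, chunk)
    return len(chunks)
```

The volume stores no names or metadata; a file system or database layered on top is what records which blocks belong to which file or table.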

1.3 File Storage

- Definition: Stores data in a hierarchical structure with directories and files, similar to traditional file systems.

- Key Features:

- Shared access and compatibility with legacy applications.

- Network-based access via protocols like NFS and SMB.

- Use Cases:

- Content repositories and shared drives.

- Example: Azure Files used for shared access in team environments.

2. Cloud Storage Deployment Models

2.1 Public Cloud Storage

- Managed by third-party providers; accessible via the internet.

- Example: Google Cloud Storage for hosting application data.

2.2 Private Cloud Storage

- On-premises or dedicated infrastructure managed privately.

- Example: OpenStack Swift used in enterprise private clouds.

2.3 Hybrid Cloud Storage

- Combines public and private cloud for flexibility.

- Example: AWS Storage Gateway bridges on-premises storage with S3.

2.4 Multi-Cloud Storage

- Uses multiple cloud providers to avoid vendor lock-in and improve redundancy.

- Example: A company using both AWS S3 and Azure Blob Storage.

3. Real-Life Examples

Netflix

- Uses Amazon S3 for object storage to store video files and distribute content globally to millions of users.

Dropbox

- Migrated to a custom hybrid cloud storage architecture using Object Storage for scalability and cost control.

Airbnb

- Relies on Amazon EBS for block storage to power its database and application workloads, ensuring high performance.

4. Key Design Considerations

- Scalability: Object storage for virtually unlimited scalability (e.g., backups, media files).

- Performance: Block storage for high-speed transactions (e.g., databases).

- Compatibility: File storage for applications requiring shared access.

- Cost: Object storage is cost-effective for large datasets.

Conclusion

Cloud storage architectures—object, block, and file storage—serve different purposes based on performance, scalability, and use cases. Real-life applications like Netflix's S3 usage and Airbnb's reliance on EBS highlight their practical importance in cloud computing.

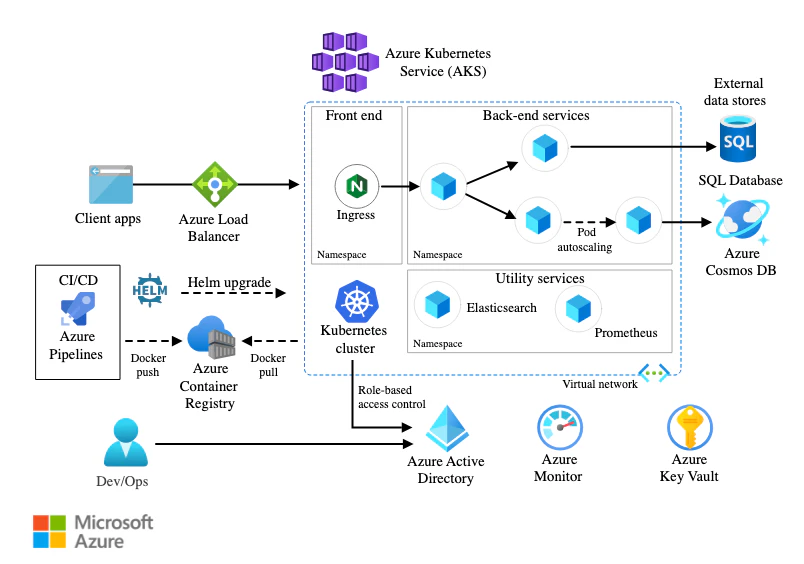

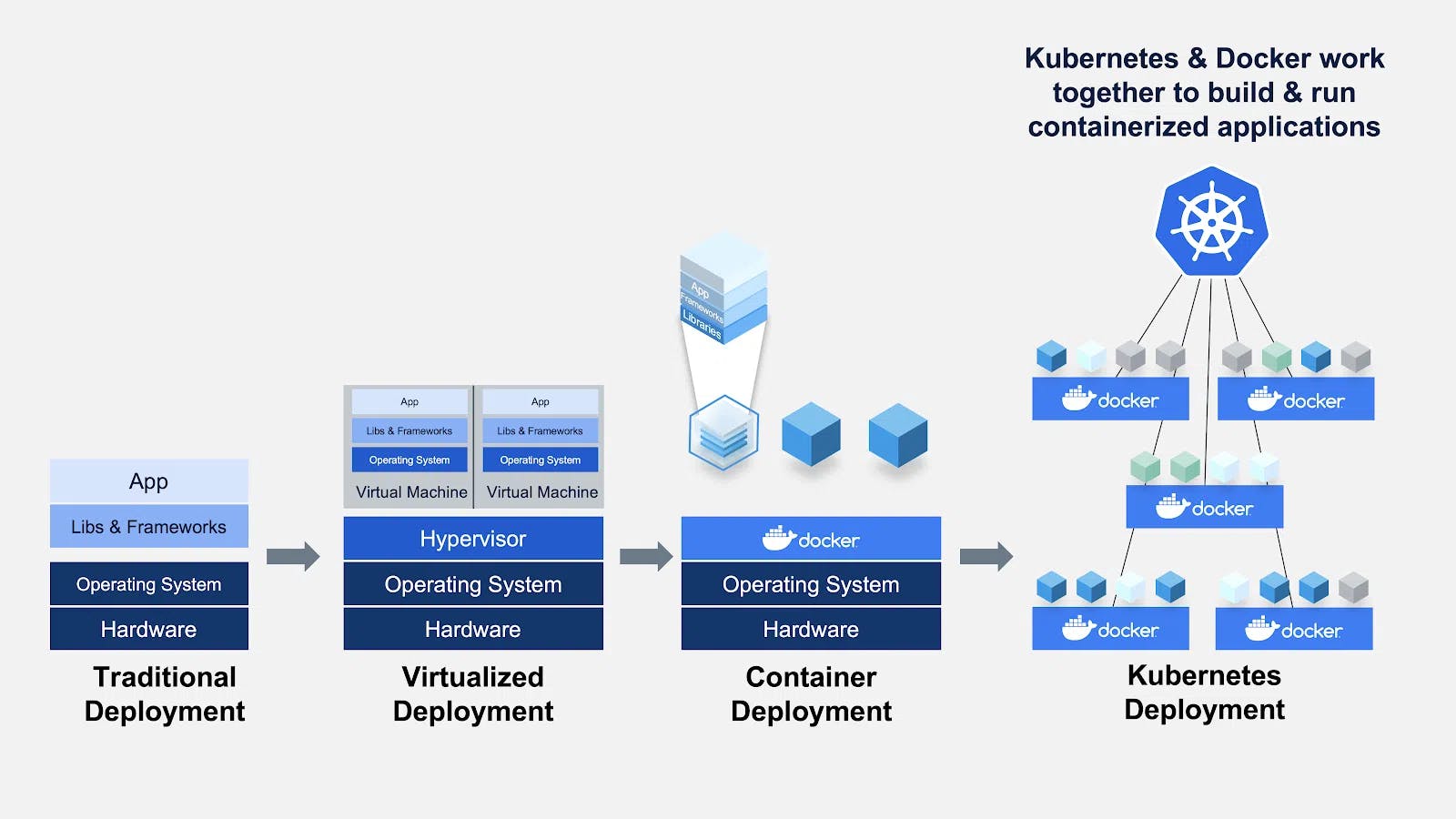

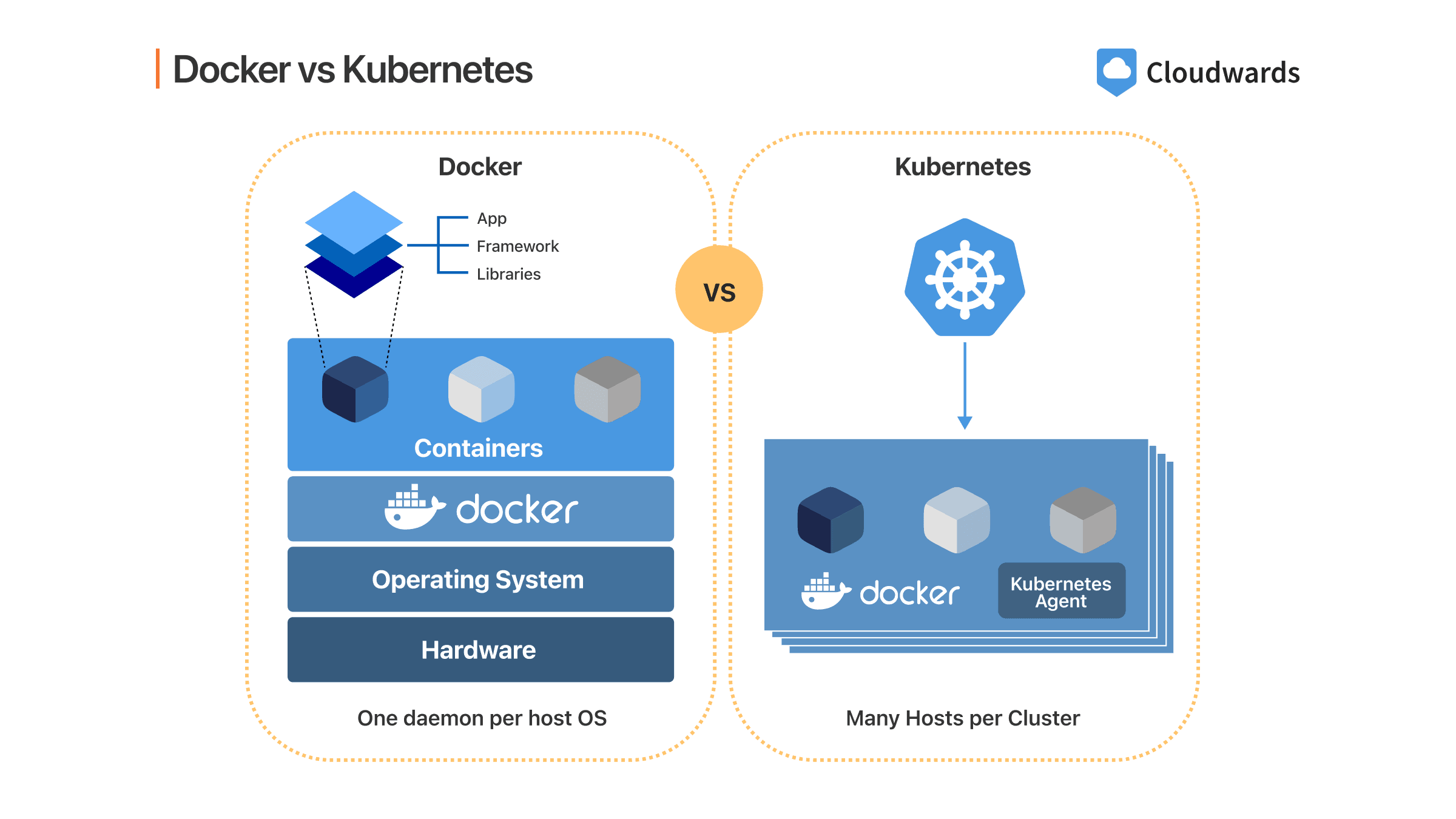

9 Containerization and Orchestration (Docker, Kubernetes)

Containerization and Orchestration: Docker & Kubernetes

Containerization and orchestration are pivotal in modern software development, enabling rapid deployment, scalability, and efficient resource utilization. Understanding Docker and Kubernetes is crucial for exams and practical applications.

1. Containerization

- Definition: The process of packaging applications and their dependencies into lightweight, portable containers that run consistently across environments.

- Key Tool: Docker.

Features of Docker:

- Lightweight: Containers share the host OS kernel, reducing overhead compared to virtual machines.

- Portability: Containers work across different environments (development, testing, production).

- Isolation: Applications run in isolated environments to avoid conflicts.

- Version Control: Tracks and manages changes with Docker images.

Use Cases:

- Application development and deployment.

- Microservices architecture.

- DevOps pipelines.

Real-Life Example:

- Spotify: Uses Docker to deploy microservices efficiently, enabling consistent development and testing environments.

2. Orchestration

- Definition: The management of multiple containers in a distributed environment, ensuring high availability, scalability, and automated deployment.

- Key Tool: Kubernetes.

Features of Kubernetes:

- Cluster Management: Manages multiple nodes in a container cluster.

- Scaling: Automatically scales containers based on traffic or resource needs.

- Self-Healing: Restarts failed containers or replaces unresponsive nodes.

- Load Balancing: Distributes traffic across containers for optimal performance.

- Declarative Management: Uses YAML files to define the desired state of an application.
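
Declarative management and self-healing both rest on a control loop that compares the desired state with what is actually running and acts on the difference. The sketch below illustrates that idea only; it is not Kubernetes code, and the service names and action strings are hypothetical.

```python
def reconcile(desired: dict[str, int], running: dict[str, int]) -> list[str]:
    """Compare desired replica counts with what is running and return actions.

    Both arguments map a (hypothetical) service name to a replica count.
    """
    actions = []
    for name, want in desired.items():
        have = running.get(name, 0)
        if have < want:
            actions.append(f"start {want - have} x {name}")
        elif have > want:
            actions.append(f"stop {have - want} x {name}")
    # Anything running that is no longer declared gets removed.
    for name, have in running.items():
        if name not in desired:
            actions.append(f"stop {have} x {name}")
    return actions


if __name__ == "__main__":
    desired = {"web": 3, "api": 2}
    running = {"web": 1, "api": 2, "legacy": 1}  # two web replicas have crashed
    for action in reconcile(desired, running):
        print(action)
```

An orchestrator runs a loop like this continuously, so a crashed container simply shows up as a gap between desired and running state and is replaced on the next pass.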

Use Cases:

- Managing large-scale microservices.

- Automating container deployment and scaling.

- Ensuring high availability and fault tolerance.

Real-Life Example:

- Google: Kubernetes was originally developed at Google and draws on the company's experience with Borg, its internal cluster manager. Google has reported launching billions of containers per week on that internal infrastructure.

3. Comparison of Docker and Kubernetes

| Aspect | Docker | Kubernetes |
|---|---|---|
| Focus | Container creation and management. | Orchestrating containers at scale. |
| Scope | Single-node containerization. | Multi-node orchestration. |
| Automation | Limited to container builds. | Automated scaling and deployment. |
| Use Case | Building and running applications. | Managing large-scale clusters. |

4. Benefits of Containerization and Orchestration

- Scalability: Scale services up or down based on demand.

- Portability: Move containers across cloud providers or on-premises setups.

- Cost Efficiency: Optimize resource usage with lightweight containers.

- Reliability: Kubernetes ensures applications remain operational through self-healing and failover mechanisms.

5. Real-Life Combined Example

- Netflix:

- Uses Docker images to package its microservices for easy deployment.

- Runs them on Titus, its in-house container management platform, which fills the orchestration role that Kubernetes plays elsewhere and keeps thousands of containers available during peak streaming periods.

Conclusion

- Docker simplifies application development with containerization, while Kubernetes orchestrates containers for large-scale deployments.

- Together, they enable agile, scalable, and reliable software delivery, making them essential for cloud-native architecture and DevOps practices.

Note:

As of March 2024, the distribution of data centers by country is as follows:

| Country | Number of Data Centers |
|---|---|
| United States | 5,381 |
| Germany | 521 |
| United Kingdom | 514 |
| China | 449 |
| Canada | 336 |
| France | 315 |
| Australia | 307 |
| Netherlands | 297 |
| Russia | 251 |
| Japan | 219 |
| Mexico | 170 |
| Italy | 168 |
| Brazil | 163 |
| India | 152 |
| Poland | 144 |
| Spain | 143 |
| Hong Kong | 122 |
| Switzerland | 120 |
| Singapore | 99 |
| Sweden | 95 |
| New Zealand | 81 |
| Indonesia | 79 |
| Belgium | 79 |
| Austria | 68 |
| Ukraine | 58 |

These figures are sourced from Statista.

Please note that the total number of data centers worldwide is continually changing due to new constructions and decommissioning of older facilities.

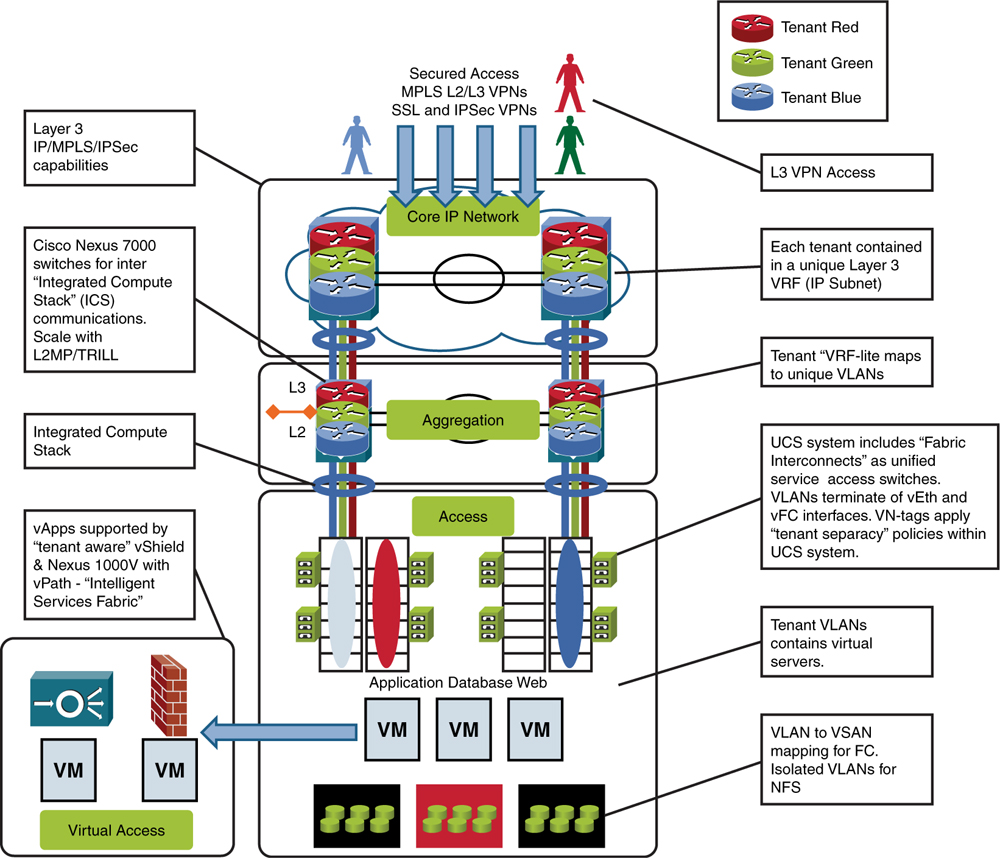

10 Networking in Cloud (SDN, VPNs)

LINK : SDN & NFV: Moving the Network into the Cloud

Networking is a critical aspect of cloud computing, enabling the communication between servers, applications, and users. Understanding Software-Defined Networking (SDN) and Virtual Private Networks (VPNs) is essential for cloud-based systems. Below is an overview of these technologies, their importance, and real-life examples.

1. Software-Defined Networking (SDN)

Definition:

- SDN is an approach to networking where the control plane (decisions about traffic routing) is decoupled from the data plane (actual forwarding of traffic).

- It enables centralized control of the network, making it more flexible, programmable, and automated.

Key Features:

- Centralized Control: SDN allows for a single point of control (software-based) to manage the entire network.

- Programmability: Network administrators can programmatically manage, configure, and monitor the network using software applications.

- Agility: Quick and easy modification of network configurations to meet changing demands.

- Cost Efficiency: Reduces hardware dependency and simplifies management.
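
The split between control plane and data plane can be made concrete with a small model: switches only look up rules, and a central controller decides what those rules are. The class names, port labels, and exact-match lookup below are simplifying assumptions; real SDN controllers and protocols are considerably richer.

```python
class Switch:
    """Data plane: forwards packets by looking up a table it did not compute."""

    def __init__(self, name: str):
        self.name = name
        self.flow_table = {}  # destination -> output port

    def forward(self, destination: str) -> str:
        # Unknown destinations are dropped in this sketch.
        return self.flow_table.get(destination, "drop")


class Controller:
    """Control plane: holds the network-wide policy and programs every switch."""

    def __init__(self):
        self.switches = {}

    def register(self, switch: Switch) -> None:
        self.switches[switch.name] = switch

    def install_route(self, destination: str, ports: dict[str, str]) -> None:
        """Push one rule per switch; `ports` maps switch name -> output port."""
        for switch_name, port in ports.items():
            self.switches[switch_name].flow_table[destination] = port


if __name__ == "__main__":
    controller = Controller()
    s1, s2 = Switch("s1"), Switch("s2")
    controller.register(s1)
    controller.register(s2)
    controller.install_route("10.0.2.0/24", {"s1": "port-3", "s2": "port-1"})
    print(s1.forward("10.0.2.0/24"), s2.forward("10.0.9.0/24"))
```

Changing network behavior means calling the controller again rather than reconfiguring each device by hand, which is where the programmability and agility listed above come from.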

Use Cases:

- Data Center Networking: Simplifies network configuration and optimization.

- Cloud Networking: Enables automated provisioning and scaling of cloud services.

- Network Security: Provides fine-grained control over network traffic.

Real-Life Example:

- Google: Uses SDN to optimize the internal networking of its cloud infrastructure. SDN allows them to scale efficiently, control traffic, and adjust network configurations dynamically.

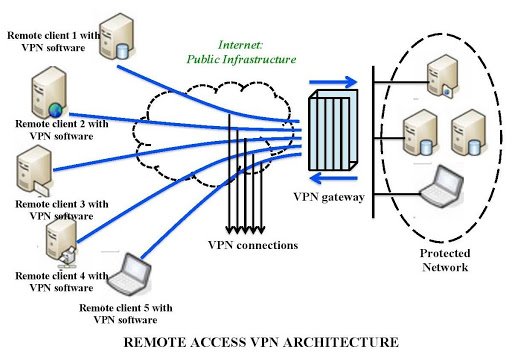

2. Virtual Private Network (VPN)

Definition:

- VPN is a secure, encrypted connection between two or more networks over the internet. It enables private communication over public networks, ensuring data privacy and security.

- Commonly used in cloud environments to securely connect remote offices, users, or cloud services.

Key Features:

- Encryption: VPNs encrypt data, ensuring that sensitive information remains secure during transmission.

- Remote Access: Enables users to access cloud resources securely from anywhere.

- Site-to-Site Connection: Connects different data centers or office networks over a secure tunnel.

- Privacy: Masks the user's IP address from the sites and services they reach, which improves privacy but does not by itself prevent every form of tracking.

Use Cases:

- Secure Remote Access: Employees accessing corporate applications securely from remote locations.

- Site-to-Site VPN: Connecting branch offices or remote data centers securely over the internet.

- Cloud Security: Ensuring secure communication between on-premises infrastructure and cloud services.

Real-Life Example:

- Amazon Web Services (AWS): Provides AWS Site-to-Site VPN, which sets up encrypted IPsec tunnels between a customer's on-premises network and their AWS environment for hybrid cloud connectivity.

3. SDN vs. VPN in Cloud Networking

| Aspect | SDN (Software-Defined Networking) | VPN (Virtual Private Network) |
|---|---|---|
| Functionality | Centralizes and automates network management | Provides secure and private communication |
| Primary Use | Network optimization, automation, and flexibility | Secure remote access, site-to-site connections |
| Key Benefit | Agility and scalability for cloud infrastructure | Security and privacy for data in transit |
| Example | Google’s SDN to control internal cloud traffic | AWS VPN connecting cloud to on-prem systems |

4. Real-Life Use Cases in Cloud Networking

Google Cloud:

- SDN: Google Cloud uses SDN to automate and optimize its network, ensuring low-latency access to cloud services. SDN helps Google scale its infrastructure dynamically, responding to changing workloads efficiently.

AWS (Amazon Web Services):

- VPN: AWS allows users to securely connect their on-premises data centers to AWS via AWS Site-to-Site VPN, which ensures encrypted traffic flows between on-prem and cloud services.

Microsoft Azure:

- SDN & VPN: Azure integrates SDN for network automation and performance, while VPN technologies (like Azure VPN Gateway) provide secure communication between Azure resources and on-premises networks.

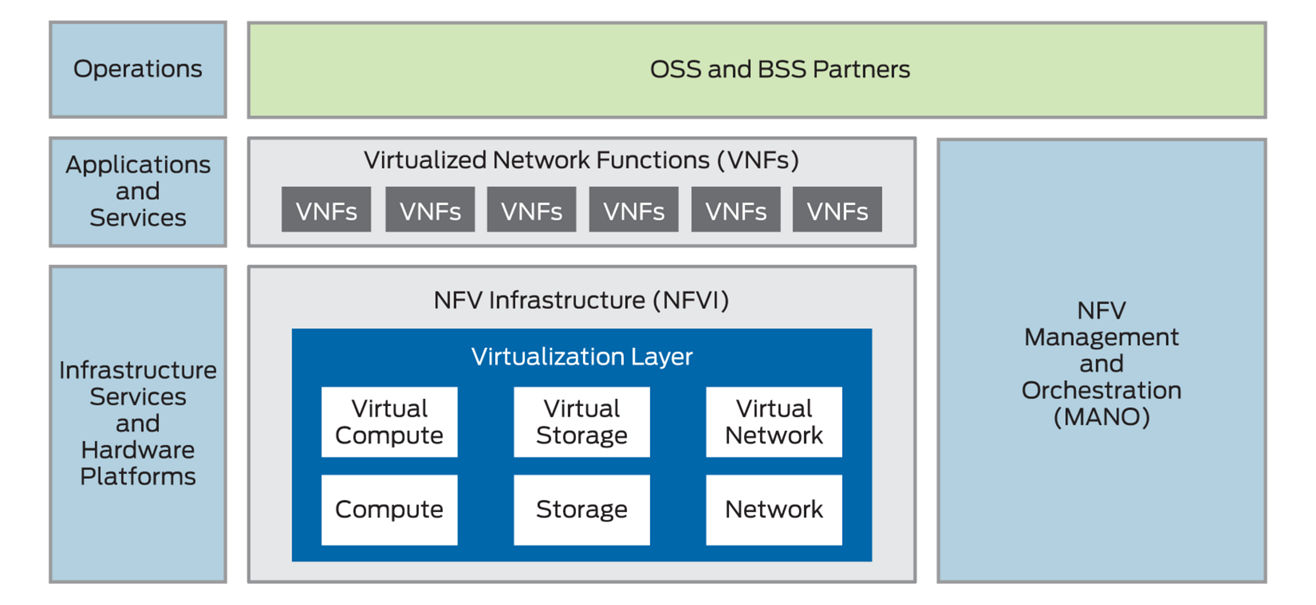

1. What is NFV (Network Functions Virtualization)?

Definition: NFV is a network architecture concept that uses virtualization technology to manage and deliver network services. Instead of relying on specialized hardware appliances (like firewalls, routers, and load balancers), NFV uses software running on general-purpose hardware to perform these functions.

Key Idea: NFV decouples network functions from proprietary hardware and virtualizes them, allowing them to run on standard virtual machines in the cloud or data centers.

2. Key Features of NFV

Virtualization of Network Functions:

- NFV virtualizes network services such as firewalls, routers, load balancers, and intrusion detection systems.

Reduced Hardware Dependency:

- Unlike traditional networking, which relies on specific hardware appliances, NFV reduces the dependency on expensive, dedicated hardware.

Scalability:

- NFV allows network functions to scale easily. New virtual instances can be spun up as demand increases, and resources can be dynamically allocated or deallocated.

Flexibility:

- Network services can be deployed, upgraded, or moved easily within the network infrastructure.

Cost Efficiency:

- By using standard servers, NFV reduces the need for costly hardware and improves operational efficiency.

3. NFV vs. Traditional Networking

| Aspect | NFV (Network Functions Virtualization) | Traditional Networking |
|---|---|---|
| Network Functions | Virtualized and software-based | Hardware-based (specialized devices) |
| Scalability | Dynamic and elastic (can scale on-demand) | Limited scalability (hardware-bound) |
| Cost Efficiency | Reduced capital expenditure on hardware | Higher cost due to specialized hardware |
| Deployment | Fast deployment with virtual machines or containers | Slow deployment, requires physical setup |

4. Key Components of NFV

Virtual Network Functions (VNFs): These are the software implementations of network functions (e.g., firewall, router, load balancer).

NFV Infrastructure (NFVI): This includes the hardware and software resources that support the VNFs, such as servers, storage, and network resources.

NFV Orchestrator (NFVO): It coordinates the provisioning, configuration, and management of VNFs and NFVI resources across the network.

Virtualized Infrastructure Manager (VIM): Manages the NFVI, including resource allocation and ensuring the availability of the virtualized network functions.
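
Since a VNF is just software, a chain of network functions can be modeled as ordinary functions applied to a packet in sequence. The sketch below is an illustration under that assumption; the packet fields, function names, and backend labels are invented, and a real VNF processes traffic at a much lower level.

```python
from typing import Callable, Optional

Packet = dict  # e.g. {"src": "10.0.0.1", "port": 443}
VNF = Callable[[Packet], Optional[Packet]]  # returns None to drop the packet


def firewall(allowed_ports: set[int]) -> VNF:
    """Build a virtual firewall that only passes traffic to allowed ports."""
    def vnf(packet: Packet) -> Optional[Packet]:
        return packet if packet["port"] in allowed_ports else None
    return vnf


def load_balancer(backends: list[str]) -> VNF:
    """Build a virtual load balancer that tags each packet with a backend."""
    def vnf(packet: Packet) -> Optional[Packet]:
        # Pick a backend from a stable hash of the source address.
        index = sum(packet["src"].encode()) % len(backends)
        return {**packet, "backend": backends[index]}
    return vnf


def run_chain(chain: list[VNF], packet: Packet) -> Optional[Packet]:
    """Pass a packet through each virtual function in order; stop if dropped."""
    for vnf in chain:
        packet = vnf(packet)
        if packet is None:
            return None
    return packet
```

Adding, removing, or reordering functions is a change to the `chain` list rather than a hardware installation, which is the flexibility the NFV components above are designed to manage.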

5. Real-Life Example of NFV in Action

Telecom Industry (e.g., AT&T):

- AT&T has adopted NFV to improve network flexibility and reduce costs. NFV allows AT&T to deliver services such as virtual firewalls, virtual routers, and load balancing without the need for expensive physical devices.

- By virtualizing network functions, AT&T can scale its infrastructure more quickly and deploy new services faster to meet customer demand.

Cloud Providers (e.g., AWS, Google Cloud):

- Many cloud service providers use NFV to offer scalable network services like virtual firewalls and load balancing. This makes it easier for enterprises to deploy network functions dynamically in their cloud environments without worrying about hardware limitations.

6. Benefits of NFV

- Cost Reduction: Significantly lowers capital expenditure and operational costs by replacing physical network appliances with virtualized instances.

- Scalability: Easily scale network functions up or down based on demand.

- Flexibility: Quickly deploy new network functions and services in the cloud without needing physical hardware.

- Automation: Supports automation in provisioning, scaling, and managing network services.

Conclusion

- NFV is an innovative approach to virtualizing network services, making networks more agile, cost-effective, and scalable.

- When combined with SDN (Software-Defined Networking), which centralizes and automates network control, NFV lets organizations run much of their network infrastructure as software in the cloud, offering greater efficiency and flexibility.

Types of Cloud Networking:

- Public Cloud Networking

- Private Cloud Networking

- Hybrid Cloud Networking

- Virtual Cloud Networking

- Software-Defined Networking (SDN) in Cloud

- Cloud-Based VPN (Virtual Private Network)

- Cloud Interconnection

- Cloud CDN (Content Delivery Network)

- Multi-Cloud Networking

- Cloud Direct Connect

11 Security in Cloud Infrastructure

LINK ; security in cloud infrastructure

Security in Cloud Infrastructure (Short Version)

Cloud security ensures the protection of data, applications, and services in the cloud. Key areas include:

1. Key Aspects of Cloud Security

- Data Protection: Encryption of data both at rest and in transit.

- Identity and Access Management (IAM): Using MFA and role-based access control (RBAC) to manage user access.

- Network Security: Protecting networks with firewalls, VPNs, and intrusion detection.

- Compliance: Ensuring adherence to regulations like GDPR, HIPAA.

- Incident Response: Real-time monitoring and rapid response to security threats.

- Backup & Disaster Recovery: Ensuring data is backed up and recoverable.

2. Cloud Security Models

- Shared Responsibility Model: The cloud provider secures the infrastructure; customers secure their data and apps.

- Cloud Access Security Broker (CASB): Sits between users and cloud services and enforces security policies on data moving between on-premises systems and the cloud.

3. Real-Life Examples

- AWS: Shared responsibility model and tools like IAM, Shield (DDoS protection).

- Google Cloud: Encrypts data at rest by default using AES-256.

- Azure: MFA for securing access to cloud services.

4. Security Best Practices

- Use Encryption: Encrypt data both at rest and in transit.

- Implement Strong IAM: Use least privilege and MFA.

- Monitor and Audit: Use tools like CloudTrail (AWS) for continuous monitoring.

- Backup and Disaster Recovery: Regular backups and a recovery plan.
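
The "least privilege and MFA" practice can be sketched as a deny-by-default permission check. The role names, the permission strings, and the rule that sensitive actions require MFA are assumptions made for this example, not any provider's actual IAM model.

```python
# Hypothetical roles and permissions, for illustration only.
ROLE_PERMISSIONS = {
    "viewer": {"storage:read"},
    "developer": {"storage:read", "storage:write"},
    "admin": {"storage:read", "storage:write", "iam:manage"},
}

# Assumption: actions that change data or access require a verified MFA session.
SENSITIVE_ACTIONS = {"storage:write", "iam:manage"}


def is_allowed(role: str, action: str, mfa_verified: bool) -> bool:
    """Deny by default; allow only actions explicitly granted to the role."""
    granted = ROLE_PERMISSIONS.get(role, set())
    if action not in granted:
        return False  # least privilege: nothing is allowed implicitly
    if action in SENSITIVE_ACTIONS and not mfa_verified:
        return False  # sensitive actions additionally need MFA
    return True


print(is_allowed("viewer", "storage:read", mfa_verified=False))
print(is_allowed("developer", "storage:write", mfa_verified=False))
print(is_allowed("developer", "storage:write", mfa_verified=True))
```

Unknown roles fall through to an empty permission set, so a typo in a role name results in a denial rather than accidental access.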

Conclusion

Cloud security is essential for protecting data and applications. Understanding key features like encryption, IAM, and real-time monitoring, alongside using tools from cloud providers, is critical for secure cloud infrastructure.

Types of Security in Cloud Infrastructure:

- Data Security

- Identity and Access Management (IAM)

- Network Security

- Application Security

- Compliance and Legal Security

- Endpoint Security

- Disaster Recovery and Backup Security

- Encryption and Key Management

- Multi-Factor Authentication (MFA)

- Cloud Security Posture Management (CSPM)

- Cloud Access Security Broker (CASB)

- Security Monitoring and Incident Response

- DDoS Protection

- Virtual Private Network (VPN) Security

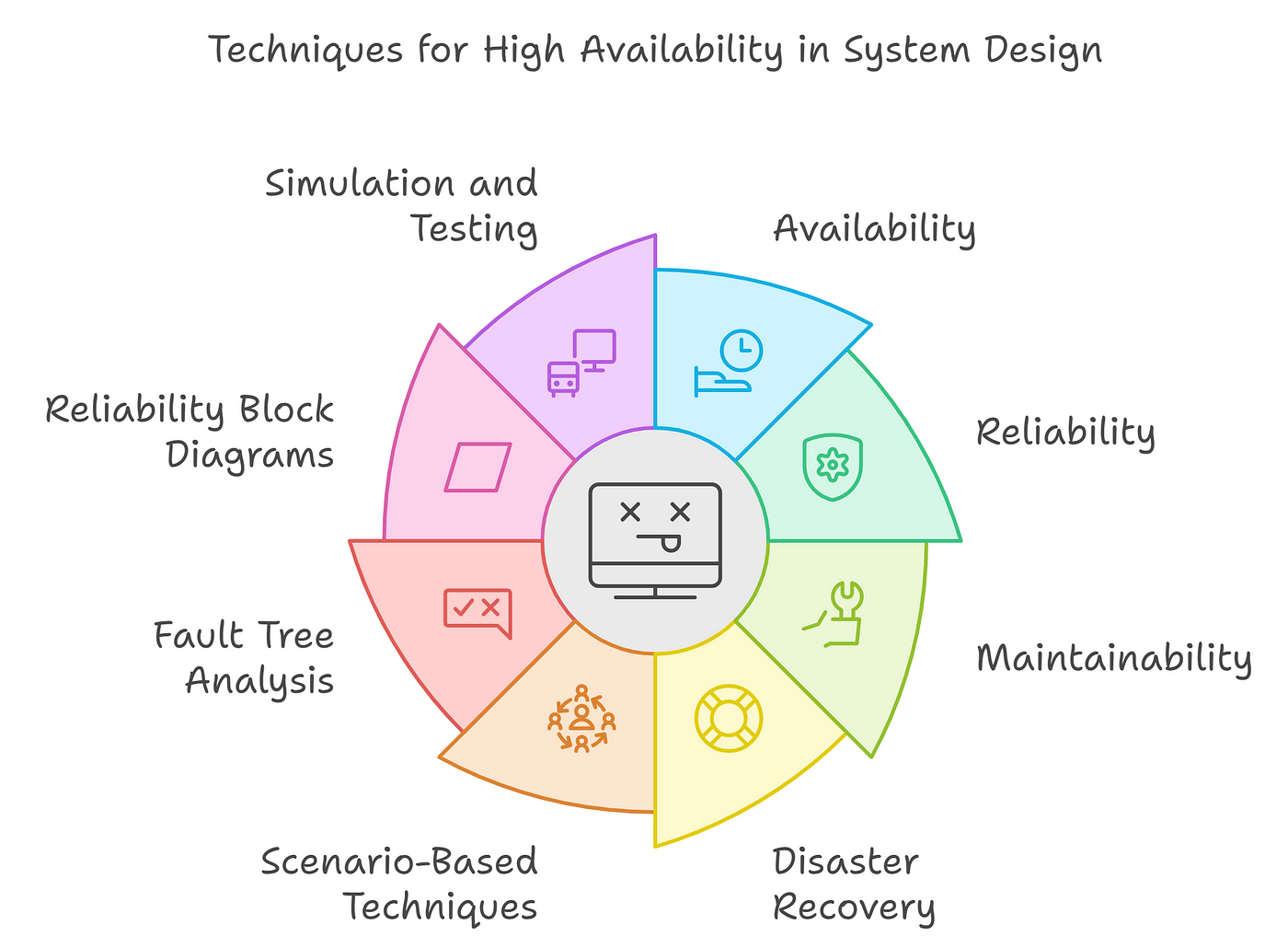

12 High Availability and Disaster Recovery

LINK ; ARCHITECT

High Availability (HA) and Disaster Recovery (DR) are essential IT strategies to ensure continuous operation and quick recovery in case of failures or disasters.

High Availability (HA):

- Definition: Systems remain accessible with minimal downtime.

- Key Points: Redundancy, automatic failover, and load balancing.

- Example: On AWS, Elastic Load Balancing routes traffic only to healthy servers, while Amazon EC2 Auto Scaling replaces instances that fail health checks.
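
The redundancy and automatic failover idea can be sketched as a round-robin router that skips servers marked unhealthy. This is a simplified illustration: the `LoadBalancer` class and the server names are hypothetical, and a real load balancer would run the health checks itself instead of being told the result.

```python
from itertools import cycle


class LoadBalancer:
    """Round-robin routing that skips unhealthy servers (toy model)."""

    def __init__(self, servers):
        self.health = {server: True for server in servers}
        self._ring = cycle(servers)
        self._count = len(servers)

    def mark(self, server, healthy):
        # Stand-in for the result of a periodic health check.
        self.health[server] = healthy

    def route(self):
        # Look at each server at most once per request.
        for _ in range(self._count):
            server = next(self._ring)
            if self.health[server]:
                return server
        raise RuntimeError("no healthy servers available")


lb = LoadBalancer(["web-1", "web-2", "web-3"])
lb.mark("web-2", healthy=False)  # simulate a failed server
print([lb.route() for _ in range(4)])
```

Requests keep flowing to the remaining servers, which is the behaviour users experience as "no downtime" even though one server has failed.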

Disaster Recovery (DR):

- Definition: Focuses on restoring systems after a major event, like a cyberattack or natural disaster.

- Key Points: Backup, replication, recovery time objective (RTO), and recovery point objective (RPO).

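- Example: Google Cloud Storage dual-region and multi-region buckets store data redundantly across geographically separate locations, so it remains available for recovery if one location fails.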
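
RPO and RTO become concrete once they are compared against an actual incident timeline. The sketch below assumes a simple model: data loss is the time between the last backup and the failure, and downtime is the time between the failure and the restore. The function name and the timestamps are made up for the example.

```python
from datetime import datetime, timedelta


def check_objectives(last_backup, failure, restored, rpo, rto):
    """Compare one incident against the recovery objectives."""
    data_loss = failure - last_backup  # work done since the last backup is lost
    downtime = restored - failure      # how long the service was unavailable
    return {
        "data_loss": data_loss,
        "downtime": downtime,
        "rpo_met": data_loss <= rpo,
        "rto_met": downtime <= rto,
    }


report = check_objectives(
    last_backup=datetime(2024, 1, 1, 2, 0),
    failure=datetime(2024, 1, 1, 2, 45),
    restored=datetime(2024, 1, 1, 4, 15),
    rpo=timedelta(hours=1),  # tolerate at most 1 hour of lost data
    rto=timedelta(hours=1),  # service must be back within 1 hour
)
print(report)
```

In this made-up incident the 45 minutes of lost data is within the RPO, but the 90 minutes of downtime misses the RTO, which would point to faster restore procedures rather than more frequent backups.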

Differences:

- HA: Minimizes downtime.

- DR: Focuses on recovery from major events.

Best Practices: Automated failover, geographically distributed infrastructure, regular backups, and cloud-based DR solutions.
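
Why redundancy across separate locations helps can be shown with a little arithmetic. The sketch assumes replica failures are independent, which real systems only approximate, so the result is an optimistic upper bound and not a guarantee.

```python
def combined_availability(single: float, replicas: int) -> float:
    """Availability of N redundant replicas, assuming independent failures.

    The service is down only when every replica is down at the same time.
    """
    return 1 - (1 - single) ** replicas


def downtime_hours_per_year(availability: float) -> float:
    """Expected yearly downtime for a given availability (365-day year)."""
    return (1 - availability) * 365 * 24


for n in (1, 2, 3):
    a = combined_availability(0.99, n)
    print(f"{n} replica(s): {a:.6f} availability, "
          f"about {downtime_hours_per_year(a):.2f} hours down per year")
```

With a single 99% available server the expected downtime is roughly 87.6 hours a year; under the independence assumption, a second replica brings that to under an hour.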

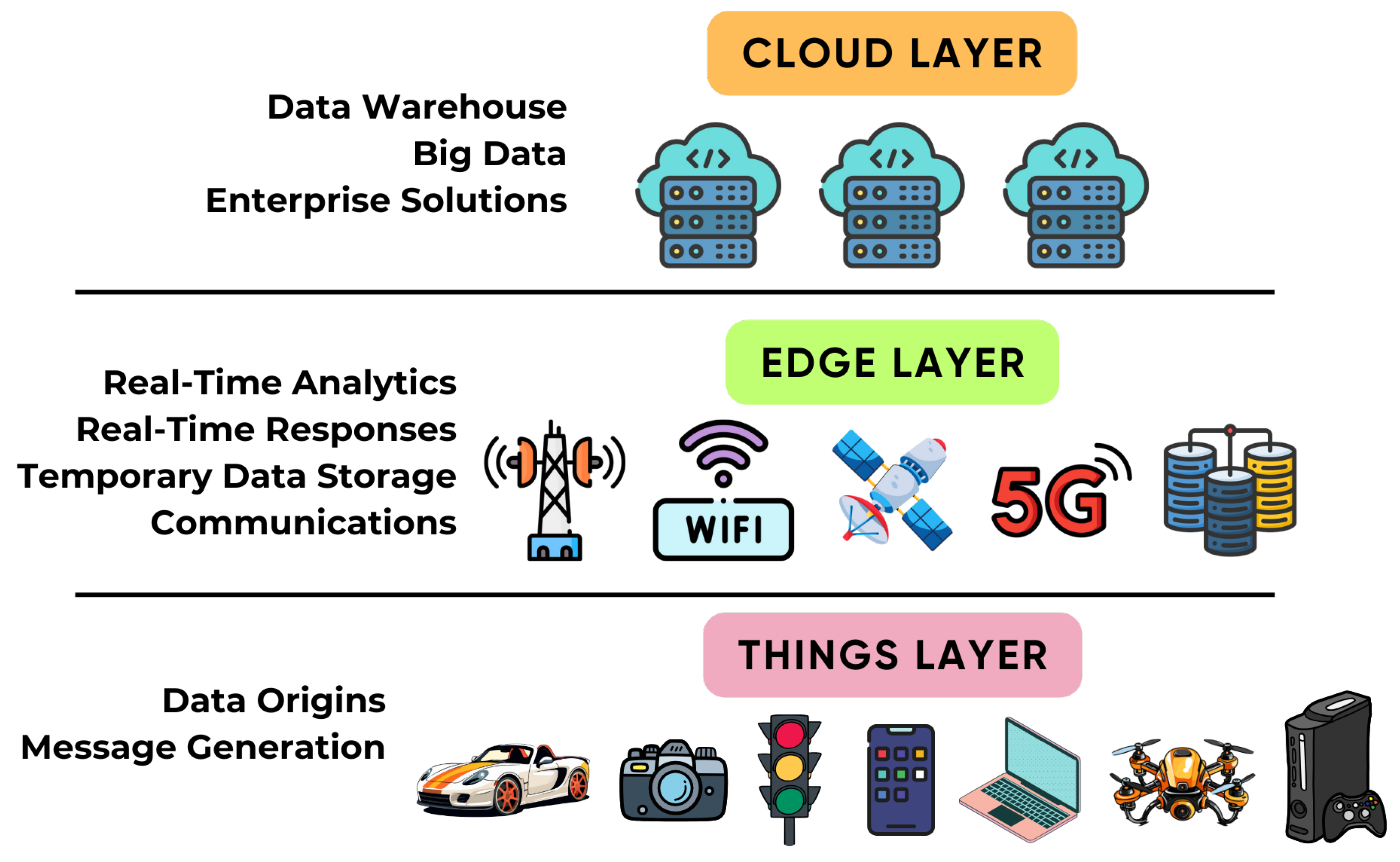

13 Emerging Cloud Technologies (Edge Computing, Fog Computing)

LINK : Emerging Cloud

Edge Computing and Fog Computing are emerging cloud technologies that are gaining importance with the rise of IoT, low-latency applications, and large-scale data processing needs. Both aim to improve performance and reduce latency by moving computing closer to the source of data.

1. Edge Computing

Definition:

Edge Computing involves processing data closer to the data source (e.g., IoT devices, sensors) rather than sending it to a central cloud or data center. This reduces latency and bandwidth usage and improves real-time decision-making.

Key Points:

- Proximity to Data: Data is processed at the "edge" (local devices, sensors), rather than relying on distant cloud data centers.

- Low Latency: Ideal for time-sensitive applications that require real-time processing, such as autonomous vehicles, smart cities, and industrial automation.

- Bandwidth Efficiency: Reduces the need for large-scale data transfers to the cloud, making it more bandwidth-efficient.
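
The bandwidth point can be illustrated with a device that summarizes readings locally and sends only the summary and any alerts upstream. This is a minimal sketch: the function name, the temperature readings, and the alert threshold are assumptions for the example.

```python
from statistics import mean


def process_at_edge(readings, threshold):
    """Summarize raw sensor readings on the device itself.

    Only this small summary would be sent to the cloud, not every reading.
    """
    if not readings:
        return {"count": 0, "mean": None, "max": None, "alerts": []}
    return {
        "count": len(readings),
        "mean": mean(readings),
        "max": max(readings),
        # Readings above the threshold need an immediate local reaction.
        "alerts": [r for r in readings if r > threshold],
    }


summary = process_at_edge([21.0, 21.5, 22.0, 35.5], threshold=30.0)
print(summary)
```

Four raw readings collapse into one small record, and the out-of-range value is detected on the device without a round trip to a data center.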

Real-life Example:

Autonomous Vehicles (e.g., Tesla)

- Tesla uses Edge Computing to process data from sensors and cameras in real-time. The car’s onboard computer analyzes data to make split-second decisions, like braking or changing lanes, without relying on the cloud.

Smart Cities (e.g., Barcelona)

- Barcelona uses Edge Computing in smart streetlights and traffic sensors to process data locally, optimizing traffic flow, street lighting, and energy consumption in real-time without needing constant cloud communication.

2. Fog Computing

Definition:

Fog Computing extends Edge Computing by creating a distributed computing layer between the cloud and edge devices. It processes data at the local level, but also integrates with a cloud infrastructure to support more complex analytics and storage when needed.

Key Points:

- Intermediate Layer: It sits between the cloud and the edge, offering localized data processing while allowing for larger-scale cloud integration.

- Scalability: Fog can handle large data flows, enabling scalable deployments like smart cities, industrial IoT, and large-scale sensor networks.

- Real-time Analytics: Fog Computing allows real-time analysis of data in remote locations with low latency.
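
The intermediate-layer role can be sketched as a fog node that makes quick local decisions and forwards data to the cloud in batches. The `FogNode` class, the batch size, and the `uploaded` list standing in for the cloud are all hypothetical simplifications.

```python
class FogNode:
    """Sits between edge devices and the cloud (toy model)."""

    def __init__(self, batch_size):
        self.batch_size = batch_size
        self.buffer = []    # readings waiting to be forwarded
        self.uploaded = []  # stand-in for batches sent to the cloud

    def receive(self, device_id, value, limit):
        """Handle one reading from an edge device and return a local decision."""
        self.buffer.append({"device": device_id, "value": value})
        # Real-time decision made locally, without waiting for the cloud.
        action = "shutdown" if value > limit else "ok"
        # Forward a full batch to the cloud for deeper analytics.
        if len(self.buffer) >= self.batch_size:
            self.uploaded.append(self.buffer)
            self.buffer = []
        return action


node = FogNode(batch_size=3)
actions = [
    node.receive("pump-1", 40.0, limit=80.0),
    node.receive("pump-2", 95.0, limit=80.0),
    node.receive("pump-3", 55.0, limit=80.0),
]
print(actions)
print(len(node.uploaded), "batch(es) sent to the cloud")
```

The overheating pump is handled immediately at the fog node, while the cloud still receives the complete batch for longer-term analysis.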

Real-life Example:

Industrial IoT (e.g., GE Predix)

- General Electric (GE) uses Fog Computing in its Predix platform for industrial IoT. Sensors on industrial equipment collect data, which is processed locally via fog nodes for real-time decisions. Data that needs more complex analysis is then sent to the cloud for deeper insights.

Smart Manufacturing (e.g., Siemens)

- Siemens uses Fog Computing in smart factories, where local sensors monitor production lines. Data is processed at the factory floor (via fog nodes), enabling immediate adjustments and reducing reliance on distant data centers.

Key Differences Between Edge and Fog Computing:

| Aspect | Edge Computing | Fog Computing |
|---|---|---|
| Processing Location | Data is processed directly at the device or edge node. | Data is processed at intermediate nodes between the edge and cloud. |
| Latency | Very low latency, ideal for real-time processing. | Low latency but higher than Edge Computing. |
| Network Traffic | Reduces the need for data transfer to the cloud. | Reduces traffic to the cloud, but still uses cloud for complex tasks. |
| Scalability | Limited by the capacity of edge devices. | More scalable as it can use intermediate fog nodes to handle more data. |

Emerging Use Cases for Edge & Fog Computing:

Autonomous Vehicles:

- Edge Computing is crucial for processing data in real-time to make decisions (e.g., Tesla’s autopilot).

Smart Cities & Traffic Management:

- Edge and Fog Computing help optimize traffic flow by processing data locally from sensors and cameras (e.g., Barcelona).

Industrial Automation:

- Fog Computing helps monitor and analyze machinery performance locally and in real-time (e.g., General Electric’s Predix platform in factories).

Healthcare:

- Edge Computing can process patient data in real-time (e.g., wearable health devices). Fog nodes might aggregate data for broader analytics.

Conclusion:

- Edge Computing focuses on data processing at the device level, reducing latency and bandwidth.

- Fog Computing extends this idea by adding an intermediate layer to integrate with the cloud for more complex analytics.

- Both technologies are pivotal in industries like automotive, manufacturing, healthcare, and smart cities, enabling real-time data processing and decisions with minimal reliance on centralized cloud infrastructure.

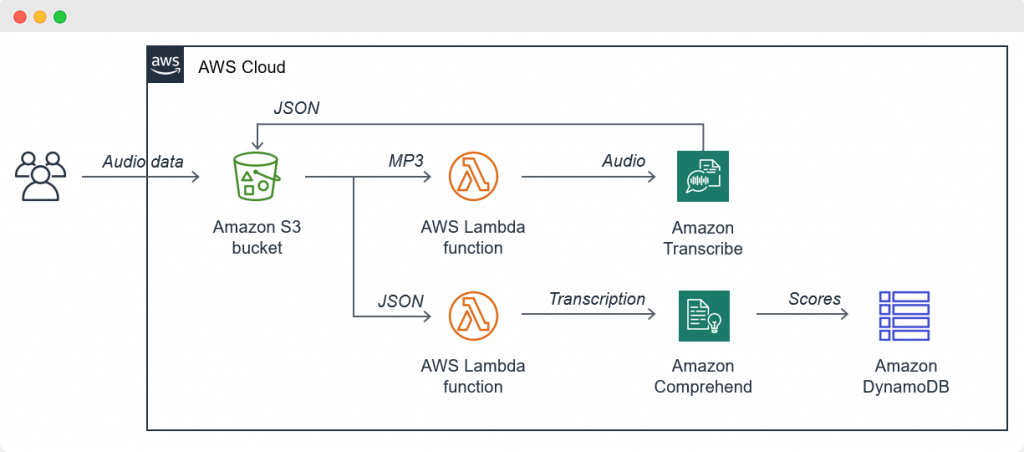

14 Serverless Computing

LINK ; Serverless Computing

Serverless Computing

Definition:

Serverless computing allows developers to run applications without managing servers. The cloud provider automatically handles infrastructure, scaling, and resource management.

Key Points:

- No Server Management: Developers focus on code, not infrastructure.

- Event-Driven: Functions are triggered by events (e.g., HTTP requests, file uploads).

- Automatic Scaling: Resources scale based on demand.

- Pay-As-You-Go: Pay only for the resources used, typically billed by number of invocations and execution time.

- Quick Deployment: Ideal for microservices and rapid application deployment.
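
The event-driven model can be sketched with a function written in the style of an AWS Lambda Python handler, which receives an `event` and a `context` argument. The event below imitates a simplified storage-upload notification; the bucket name, the file key, and the return shape are assumptions for the example, and the function is called locally here instead of being deployed.

```python
def handler(event, context):
    """Runs once per event; there is no server for the developer to manage."""
    processed = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        key = record["s3"]["object"]["key"]
        # Real work (e.g. resizing an image) would happen here.
        processed.append(f"processed s3://{bucket}/{key}")
    return {"statusCode": 200, "processed": processed}


# Simulated trigger: a file upload event (simplified, hypothetical values).
fake_event = {
    "Records": [
        {"s3": {"bucket": {"name": "example-uploads"},
                "object": {"key": "photos/cat.jpg"}}}
    ]
}
result = handler(fake_event, context=None)
print(result)
```

The platform, not the developer, decides how many copies of `handler` run at once, which is where the automatic scaling comes from.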

Types of Serverless Computing:

Function-as-a-Service (FaaS):

- Definition: Execute small, event-driven functions.

- Example: AWS Lambda, Google Cloud Functions.

Backend-as-a-Service (BaaS):

- Definition: Use managed backend services (e.g., databases, authentication) without managing infrastructure.

- Example: Firebase, AWS Amplify.

Real-Life Examples:

AWS Lambda (FaaS):

Netflix uses Lambda for video processing, scaling automatically based on demand.

Google Firebase (BaaS):

The New York Times uses Firebase for real-time data synchronization across devices in their mobile app.

Serverless computing is perfect for dynamic, event-driven applications with variable traffic.
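
Why this suits variable traffic is easiest to see in a rough cost estimate: the bill follows usage, so quiet periods cost little. The sketch assumes a common FaaS billing shape (a per-request fee plus a fee per GB-second of execution); the prices used are placeholders, not any provider's real rates.

```python
def estimate_cost(invocations, avg_duration_ms, memory_mb,
                  price_per_million_requests, price_per_gb_second):
    """Rough monthly cost for a function under usage-based billing."""
    # Compute time is billed as memory (GB) multiplied by duration (seconds).
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    request_cost = invocations / 1_000_000 * price_per_million_requests
    return request_cost + gb_seconds * price_per_gb_second


# Placeholder prices, chosen only to make the arithmetic easy to follow.
busy_month = estimate_cost(2_000_000, 200, 512, 0.20, 0.00001)
quiet_month = estimate_cost(100_000, 200, 512, 0.20, 0.00001)
print(f"busy month:  {busy_month:.2f}")
print(f"quiet month: {quiet_month:.2f}")
```

With these placeholder rates, twenty times less traffic means a twenty times smaller bill, whereas an always-on server would cost the same in both months.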
